Last month at the annual TV Shootout, hosted by Value Electronics, the top OLED TVs squared off in a darkened room to see which offered the best overall picture quality. With all four TVs professionally calibrated, and nine judges evaluating each set against professional broadcast reference monitors over the course of several hours, a new "King of TV" was crowned (the Sony BRAVIA 8 II), but not without some controversy.

In the weeks leading up to the event, all four TVs had been reviewed by tech journalists, TV reviewers and YouTube content creators, and each had established itself as a strong performer in its own right. The TVs included in the event were the current flagship OLED models from LG, Panasonic, Samsung and Sony available in the U.S.

The Top OLED TVs of 2025:

- LG G5 OLED TV

- Panasonic Z95B OLED TV

- Samsung S95F QD-OLED TV

- Sony BRAVIA 8 II QD-OLED TV

While three of the TVs were very tightly matched, with just a few hundredths of a point separating the top three scores, many attendees, judges (myself included), remote viewers and readers were surprised by the relatively poor showing of LG's G5 in the event.

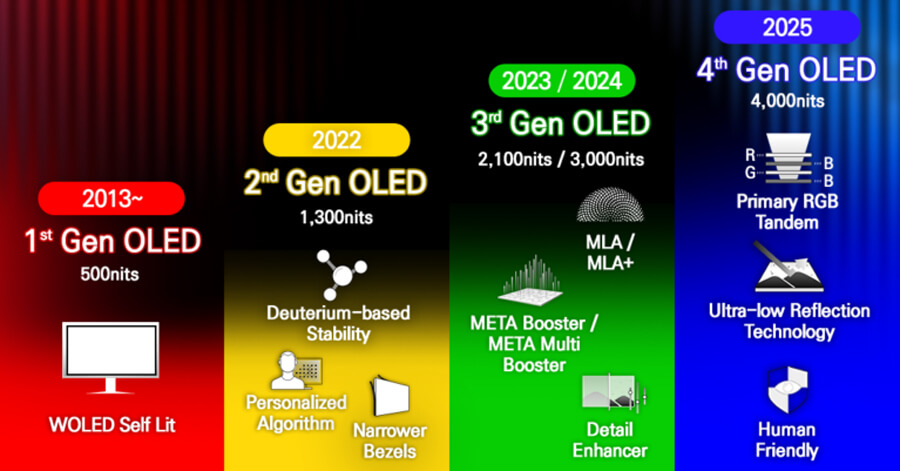

The OLED panel used in the LG G5 is a new "Primary RGB Tandem" or "Quad Stack" OLED panel that uses separate OLED layers for each primary color. Tests have verified that the G5 is capable of much higher peak brightness than previous LG OLED TVs and is even on par in brightness with many LED/LCD TVs. This matters because, while black levels and contrast are the typical strengths of OLED TVs, peak brightness is typically OLED's Achilles heel. On paper, then, the G5's improved peak luminance should have helped it perform well in any head-to-head competition.

But the LG struggled in the TV Shootout. Was it the specific types of tests used? Were test patterns weighted more heavily than real-world content? Did professional calibration minimize the performance differences among the TVs on certain content? Many have shared their opinions and theories online. Many were also interested to see whether the TV Shootout rankings would hold up in similar comparison events.

OLED vs. OLED – Round Two

When our friends over at Tech Radar had the opportunity to compare all four flagship OLED sets in house, they decided to run a blind test of these same four TVs. However, they did their test a bit differently. Instead of professionally calibrating the sets and comparing them to reference monitors, they left the TVs in their "out of the box" settings: specifically "Cinema" or "Movie" mode for movies and TV shows and "Standard" mode for sports. And rather than subjecting the TVs to a combination of test patterns and real-world content, they used only real-world content, ranging from low-resolution DVDs, streaming and broadcast sources to higher-quality 4K physical media.

Tech Radar’s OLED TV Showdown: A Rematch for the Ages

By testing "blind," with no visible badges, the Tech Radar comparison would eliminate (or at least reduce) any brand bias among the judges. Of course, anyone in the audience familiar with the various brands' menus and operating systems could probably spot which TV was which (Google TV, Tizen, webOS and Fire TV all have very different-looking menus). And the Samsung "Glare Free" matte screen looks quite different from the glossier screens of the other three TVs. But at least there was no badge or logo visible on the TV itself.

Testing with out-of-the-box settings also allowed brighter sets like the Samsung S95F and LG G5 to "shine" (no pun intended), since their SDR performance was not restricted to the 100-nit peak brightness target used in calibration. According to what Sony told us at a press event last year, only around 10% (or fewer) of its customers ever adjust the picture settings on their TVs. So a TV's performance with default settings may matter more to most buyers than how it compares when professionally calibrated.

Tech Radar’s Test Categories:

- Black Levels/Contrast

- Color

- Fast-Paced Action Movies

- Motion (Sports)

- Upscaling

Tech Radar's scoring system was quite different from that of the Value Electronics TV Shootout. Rather than scoring each set from 1 to 5 against a reference monitor, each of Tech Radar's 12 judges picked a single winner in each test category. Only the category winner earned a point from each judge.

And the Winner Is…

After Tech Radar's five rounds of testing were complete, the votes were tallied and the results were shared. These results were quite different from those of the TV Shootout. The LG G5, which had finished at the bottom of the TV Shootout, tied for first place with the Samsung S95F. Meanwhile the Sony BRAVIA 8 II, which finished on top of the TV Shootout, finished at the bottom of the Tech Radar showdown. The Sony's relatively lower peak brightness clearly put it at a disadvantage against the others in out-of-the-box testing. And the LG's strong performance upconverting a standard DVD to 4K resolution allowed it to sweep that entire category.

While the LG G5 won the upconversion test and tied with the Panasonic Z95B in the motion/sports test, the Samsung S95F got the most votes in the black level, color and fast-paced action categories. And its anti-glare matte finish made it a favorite for bright-room viewing.

They’re All Winners?

It's important to keep one thing in mind: even the lowest performer in this test (the Sony BRAVIA 8 II) has earned rave reviews from both consumers and professional TV reviewers. Tech Radar itself rated the Sony BRAVIA 8 II at 4.5 stars (out of 5). With rich, deep blacks and outstanding color performance, OLED TVs continue to be favored by TV shoppers looking for the best, most accurate picture performance. Compared to OLED TVs of previous years, even the Sony is capable of much higher peak brightness, though it can't match the brightness of the LG G5, Samsung S95F or Panasonic Z95B.

Full results and details from the Tech Radar OLED TV Showdown are available here:

Flagship OLED TV Showdown 2025 at Tech Radar

The Bottom Line

As I mentioned when covering the TV Shootout, no TV is perfect, and no TV comparison event is perfect either. The TV Shootout favors ultimate accuracy in picture performance compared to a broadcast reference monitor and levels the playing field with professional picture calibration. Tech Radar's comparison had the benefit of being "blind," but used a more subjective methodology, asking each judge to vote for the image they thought looked "best" in each test using standard out-of-the-box picture settings. Prospective buyers should check out the individual scores from each event and pick their own "winner" based on how each set performed on the tests that matter most to them. Or better yet, if you are able, get out to a showroom and see them for yourself.

Related Reading:

- TV Shootout 2025 Results: A New King of TV is Crowned for 2025

- Samsung S95F – The Glare-Free King? My Quick Take Review (Video)

- Hands on with LG’s C5, G5 and M5 OLED TVs for 2025

- This Dark Horse OLED TV May be the Best TV of 2025

- Podcast: 2025 TV Shootout Results Analyzed With B The Installer

Ian White

August 15, 2025 at 7:59 pm

Fascinating and surprising at the same time.

LG's poor showing at the first shootout was a bit of a shock, and I almost choked on my coffee after seeing a video on YouTube that suggested the deck was stacked in Sony's favor because the store sells so many of them.

BS.

The TV shootouts feel very balanced and professional when you look at the test criteria and how they are run.

Everyone’s room is different and all 4 of these TVs are very high end performers.

We’re very lucky to have so many great options.

Chris Boylan

August 15, 2025 at 10:00 pm

Yeah, as one of the judges at the event, I didn’t see any evidence of shenanigans. At these price points, Robert probably makes about the same amount of money selling any one of these TVs. The LG had significant issues on several of the tests but some of these issues were more evident in test patterns than in real world content. I think, if anything, some weighting of the scores might have made the composite scores more relevant and indicative of the things that people really notice and value in picture performance.

Robert Zohn

August 16, 2025 at 11:54 pm

Thank you Ian, all very well said!

Also know that all four 2025 flagship OLED TVs performed very, very close to each other in every attribute of image quality.

These are all very excellent premium OLED TVs and you can select the best one for your use-case and liking.

ORT

August 16, 2025 at 3:08 am

While it is true (TWUE?!) that no TV is “perfect”, there is a perfect TV for every budget.

“Sheeeeit, ORT! That’s all you had to say!”

Good article, Chris! A real world test like this does indeed seem to be the way to go!

ORT

Chris Boylan

August 16, 2025 at 2:31 pm

ORTicus Finch!

It’s TWUE. It’s TWUE. There is a perfect TV for every budget. But even there, “perfect” is informed by personal choice. For about $1,000, you can get a basic OLED and enjoy those gorgeous deep blacks, or a nice MiniLED/LCD set that can put out a punchy picture even in a bright room. It’s all about preference.

Robert Zohn

August 16, 2025 at 11:59 pm

In our conversations with clients, we politely interview them to learn about their room environment, the content they mostly watch, their viewing distance, and much more.

Then we can make several suggestions with our best offers for them to consider.